Virtual Private Tecton Architecture

The Virtual Private Tecton Architecture combines the best aspects of a managed SaaS application, dedicated single-tenant infrastructure, and customer data ownership.

This guide explains the concepts behind Virtual Private Tecton, including how feature data is processed and stored.

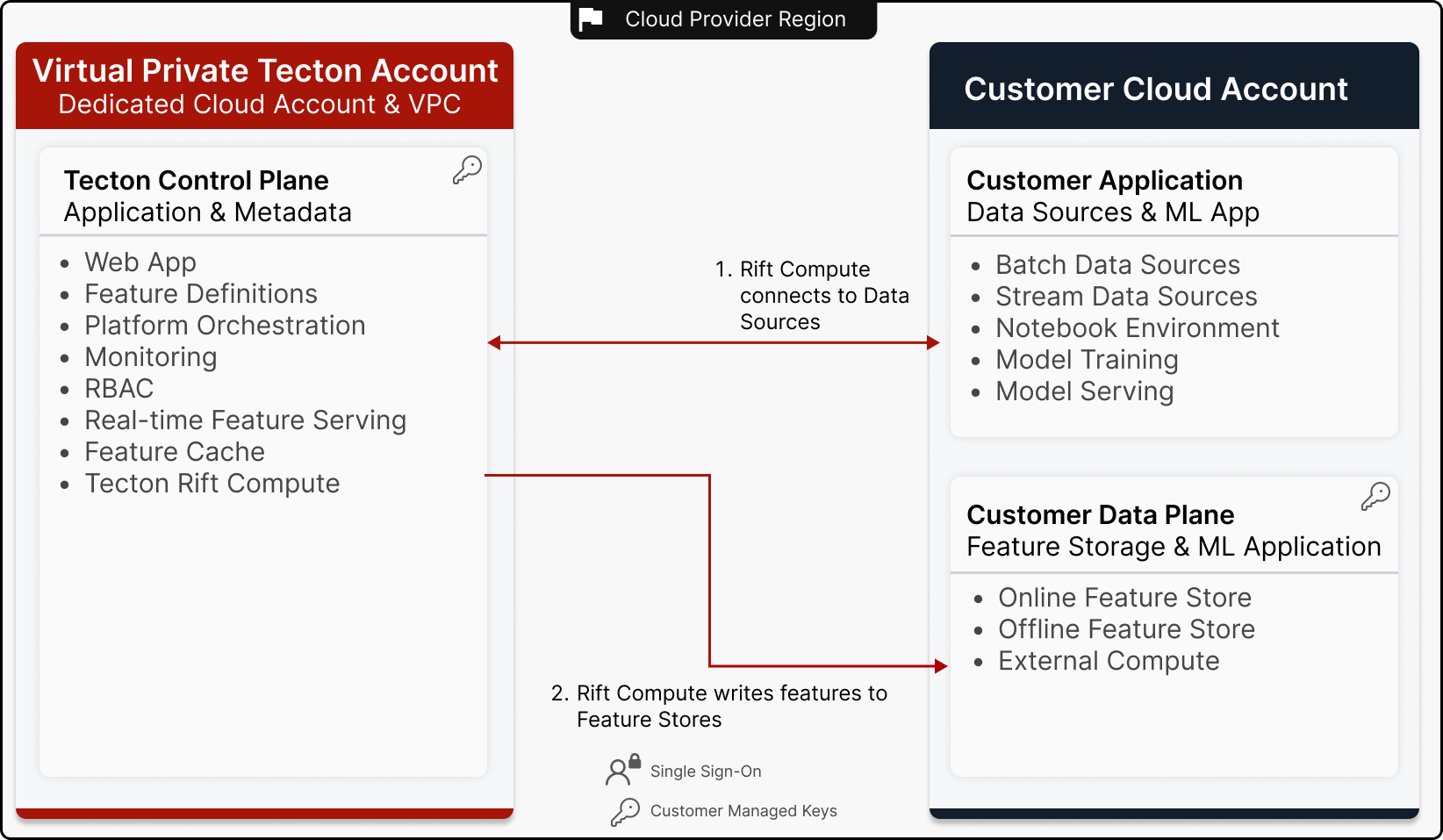

Deployment Architecture

The following diagram illustrates how a Tecton Deployment spans both a Tecton Account and a Customer Cloud Account.

Virtual Private Tecton Account

The Virtual Private Tecton Account is private because it runs in a dedicated Cloud Account and VPC.

The Tecton Control Plane hosts the metadata and orchestration services, as well as the production infrastructure that serves features. It is also responsible for computing real-time features at serving time and Rift streaming features that use the Stream Ingest API.

Customer Cloud Account

The Customer Cloud Account consists of:

- The Customer Data Plane, which includes the Online and Offline Feature Stores for the Tecton Account as well as Compute Providers where Tecton orchestrates feature processing jobs.

- The Customer Application, which provides the data sources for feature pipelines and accesses features for ML training and inference.

Data Storage & Flows

Data Storage

The Virtual Private Tecton design enables customers to retain feature storage in their own Cloud Account. Additionally, users may configure the Tecton Serving Cache, in which features are cached in volatile memory for up to 24 hours.
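The caching behavior described above can be illustrated with a minimal Python sketch of an in-memory cache. Each entry expires after a configurable time-to-live, and that TTL is capped at 24 hours. This is a hypothetical illustration, not Tecton's implementation. The class name `FeatureCache`, the injectable `clock`, and the string key format are all assumptions. Entries live only in process memory, so they disappear on restart. An expired entry is reported as a miss, so in this sketch the caller would fall back to the Online Store.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple

# Upper bound on how long a value may stay cached: 24 hours.
MAX_TTL_SECONDS = 24 * 60 * 60


class FeatureCache:
    """Hypothetical volatile TTL cache; contents are lost when the process exits."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        if not 0 < ttl_seconds <= MAX_TTL_SECONDS:
            raise ValueError("ttl_seconds must be greater than 0 and at most 24 hours")
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (expiry timestamp, cached feature values)
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def put(self, key: str, value: Any) -> None:
        """Cache feature values until now + TTL."""
        self._entries[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        """Return cached values, or None on a miss or after expiry."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Expired: evict, and report a miss so the caller reads the Online Store.
            del self._entries[key]
            return None
        return value
```

Injecting the clock keeps the expiry logic testable without waiting in real time. `time.monotonic` is the default because wall-clock adjustments do not affect it.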

The Tecton Control Plane only stores metadata needed to run the Tecton service, such as feature definitions, developer accounts, and access controls. The Control Plane is encrypted by default, and may optionally be encrypted with Customer Managed Keys.

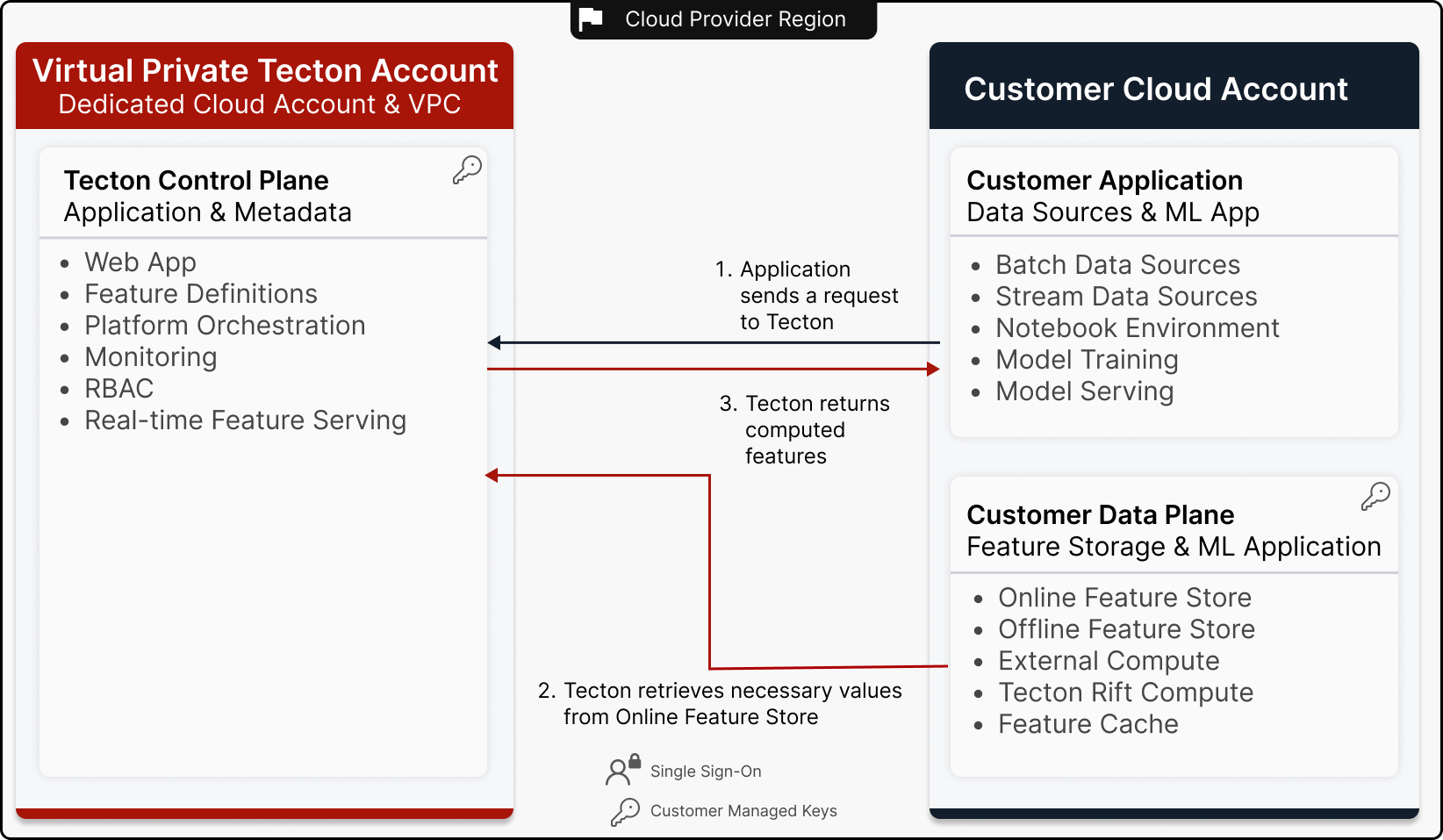

Online Feature Retrieval Data Flow

Online Feature Retrieval runs through the Feature Server in the Tecton Control Plane.

The diagram below illustrates the data flow for Online Feature Retrieval:

- The Customer Application initiates a feature access request to Tecton. This request is authenticated with a Tecton Principal, and that principal must have the appropriate access configured.

- The feature access request is fulfilled by the Feature Server. If necessary, the Feature Server will access data from the Feature Store using the credentials provided during Account Configuration.

- Tecton returns the final feature values to the Customer Application.

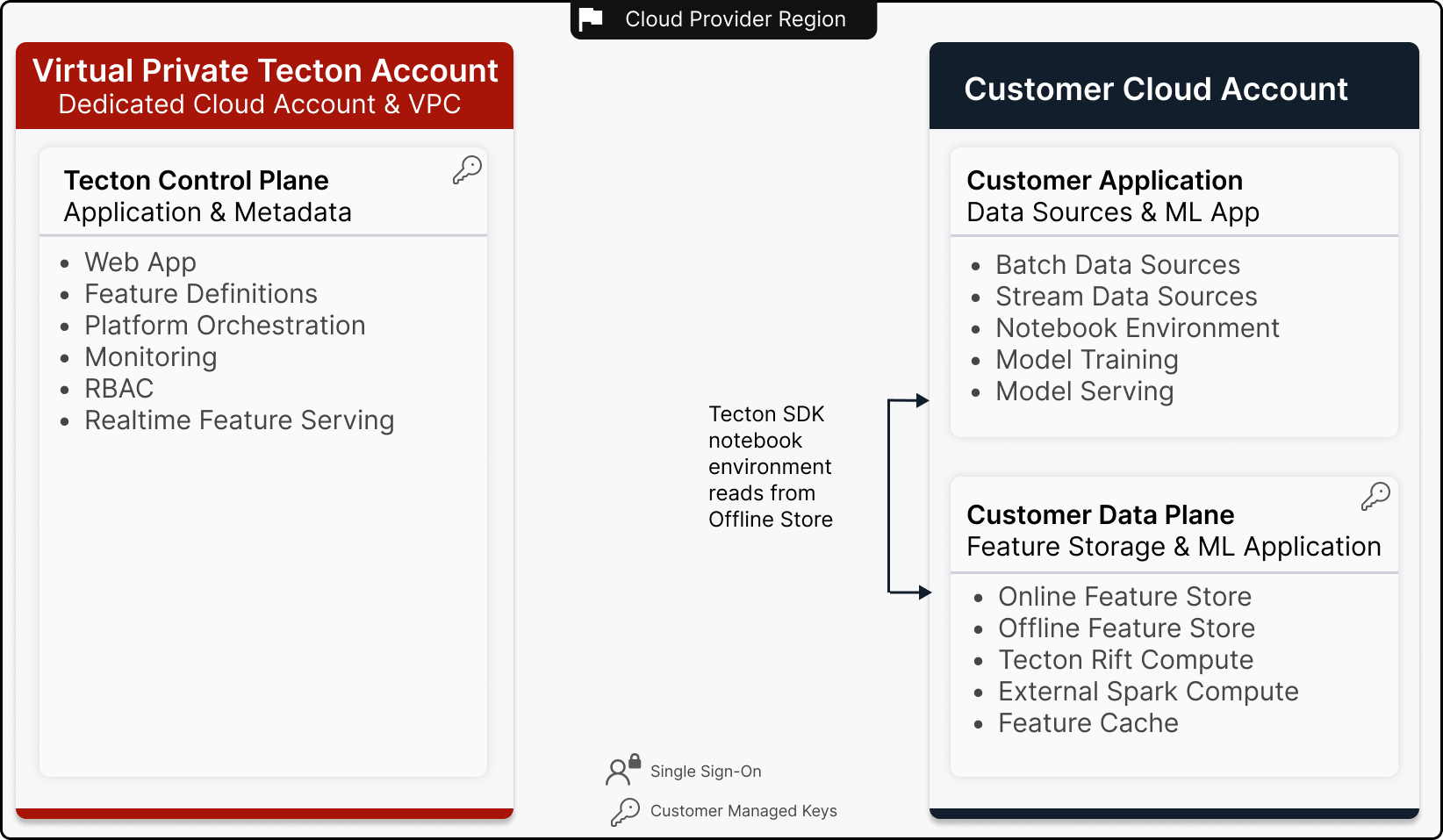
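The feature access request from the Customer Application can be sketched as a plain HTTP call that uses only the Python standard library. This sketch makes three assumptions. First, the Feature Server exposes a JSON `get-features` endpoint. Second, that endpoint accepts an API key issued to a Tecton Principal (for example, a service account). Third, the key is passed in a `Tecton-key` authorization header. The endpoint path, the payload field names, and the example base URL are assumptions to check against the HTTP API reference for your deployment.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

# Assumed endpoint path; verify against the HTTP API reference for your Tecton version.
GET_FEATURES_PATH = "/api/v1/feature-service/get-features"


def build_get_features_request(
    base_url: str,
    api_key: str,
    workspace: str,
    feature_service: str,
    join_keys: Dict[str, Any],
    request_context: Optional[Dict[str, Any]] = None,
) -> urllib.request.Request:
    """Build (but do not send) an online feature retrieval request."""
    params: Dict[str, Any] = {
        "workspace_name": workspace,
        "feature_service_name": feature_service,
        "join_key_map": join_keys,
    }
    if request_context:
        # Request-time inputs, e.g. for real-time features computed during serving.
        params["request_context_map"] = request_context
    body = json.dumps({"params": params}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + GET_FEATURES_PATH,
        data=body,
        method="POST",
        headers={
            # The key identifies the Tecton Principal, which must have access configured.
            "Authorization": f"Tecton-key {api_key}",
            "Content-Type": "application/json",
        },
    )


def get_features(req: urllib.request.Request, timeout: float = 2.0) -> Dict[str, Any]:
    """Send the request to the Feature Server and decode the JSON response."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)
```

Keeping request construction separate from sending makes the payload easy to inspect and test. In a real application, `get_features(req)` would send the request to the Feature Server. The server reads from the Feature Store as needed and returns the feature values.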

Offline Feature Retrieval Data Flow

Offline Feature Access is managed by the Tecton SDK. The Tecton SDK is available as a Python package and is typically installed in a Notebook environment.

To retrieve features for offline training and inference, the Tecton SDK will read data directly from the Offline Store.

When using Remote Dataset Generation, Tecton instead runs batch compute jobs, as outlined below.

Batch Feature Compute Data Flow

Batch feature compute jobs are executed in the Customer Data Plane and write data to the Online and Offline Stores in the Customer Data Plane. This applies to both Rift and External Compute providers (Databricks and EMR).

Stream Feature Compute Data Flow

For stream feature pipelines that run on Rift and use the Stream Ingest API, transformations are run in the Tecton Control Plane at ingestion time.

For stream feature pipelines running on External Compute (Databricks or EMR), transformations are executed in the Customer Data Plane as part of a managed Spark Streaming job.

Realtime Feature Compute Data Flow

Realtime features are computed in the Tecton Control Plane when features are requested.
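The batch, stream, and realtime data flows above can be summarized as a small lookup of where transformations execute in a Virtual Private Tecton deployment. This is a hypothetical Python sketch. The function name, the string labels for feature types and compute providers, and the `uses_stream_ingest_api` flag are illustrative only. Combinations this guide does not describe, such as Rift stream pipelines that do not use the Stream Ingest API, raise an error rather than guessing.

```python
from enum import Enum
from typing import Optional


class Location(Enum):
    CONTROL_PLANE = "Tecton Control Plane"
    CUSTOMER_DATA_PLANE = "Customer Data Plane"


# Illustrative labels for the External Compute providers named in this guide.
EXTERNAL_COMPUTE = {"databricks", "emr"}


def transformation_location(
    feature_type: str,
    compute: Optional[str] = None,
    uses_stream_ingest_api: bool = False,
) -> Location:
    """Return where transformations run in a Virtual Private Tecton deployment."""
    if feature_type == "realtime":
        # Computed when features are requested; no compute provider involved.
        return Location.CONTROL_PLANE
    if feature_type == "batch" and (compute == "rift" or compute in EXTERNAL_COMPUTE):
        # Jobs write to the Online and Offline Stores in the customer's account.
        return Location.CUSTOMER_DATA_PLANE
    if feature_type == "stream":
        if compute == "rift" and uses_stream_ingest_api:
            # Transformed at ingestion time.
            return Location.CONTROL_PLANE
        if compute in EXTERNAL_COMPUTE:
            # Executed as part of a managed Spark Streaming job.
            return Location.CUSTOMER_DATA_PLANE
    raise ValueError(f"not described in this guide: {feature_type!r} on {compute!r}")
```

Raising on unknown combinations keeps the table honest. It encodes only the placements stated in this section.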

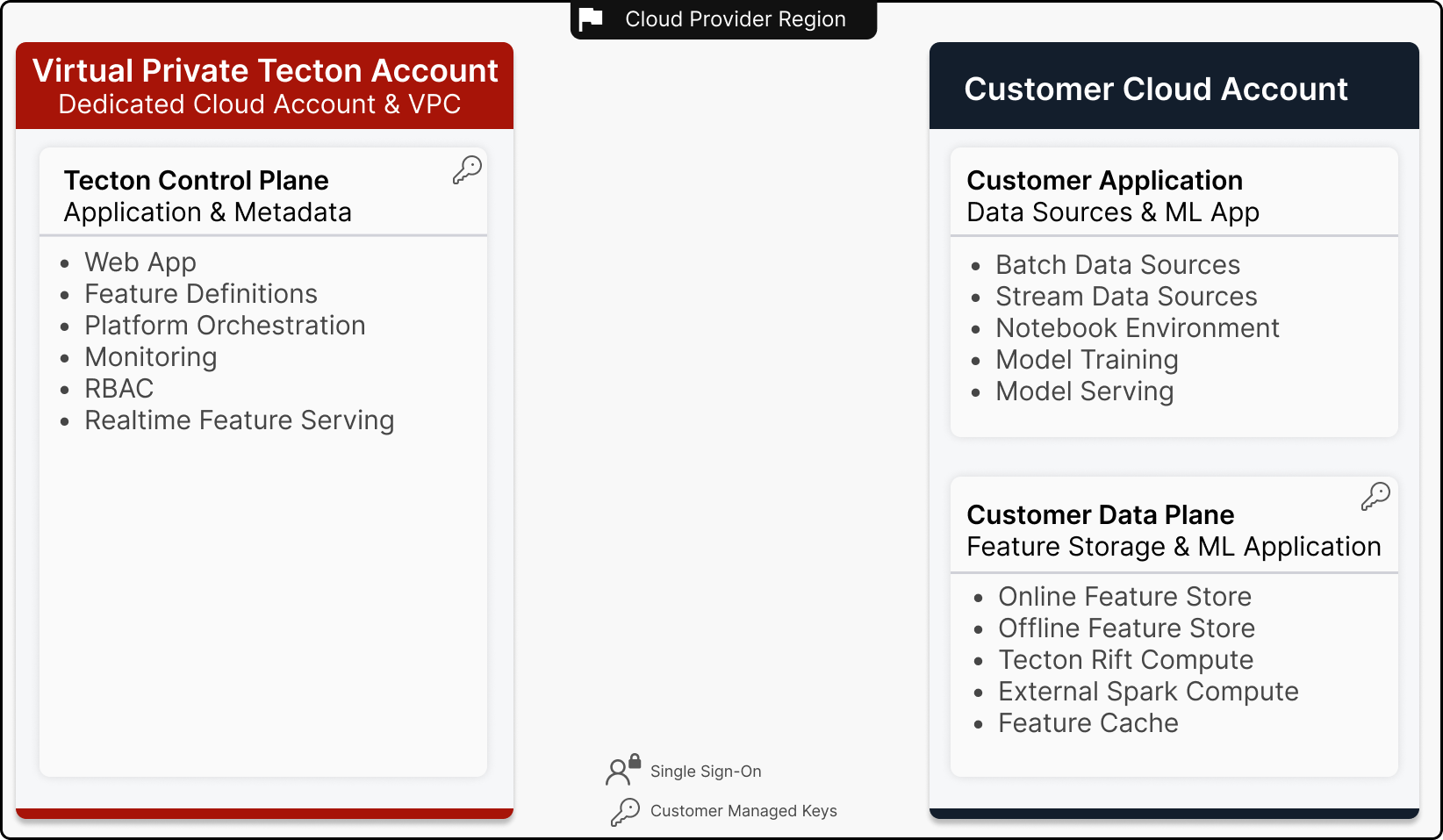

Hybrid SaaS Deployment Alternative

Tecton also offers a hybrid SaaS deployment option. In this option, Rift batch compute jobs and the Tecton Feature Cache both run in the Tecton Control Plane. Online and Offline Feature Storage continues to live in the Customer Data Plane.

With this option, organizations can get started in minutes with a high-performance, low-maintenance Feature Platform.
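The difference between the two deployment options can be expressed as a placement table. The hypothetical Python sketch below records only the placements this guide states explicitly, and its component keys are illustrative labels. It also computes which shared components change location between the options. In this table, Rift batch compute is the only shared component that moves. Online and Offline Feature Storage stay in the Customer Data Plane in both options.

```python
from typing import Dict, Tuple

CONTROL_PLANE = "Tecton Control Plane"
DATA_PLANE = "Customer Data Plane"

# Only placements stated in this guide; component keys are illustrative labels.
PLACEMENT: Dict[str, Dict[str, str]] = {
    "virtual_private": {
        "feature_server": CONTROL_PLANE,
        "rift_batch_compute": DATA_PLANE,
        "online_store": DATA_PLANE,
        "offline_store": DATA_PLANE,
    },
    "hybrid_saas": {
        "feature_cache": CONTROL_PLANE,
        "rift_batch_compute": CONTROL_PLANE,
        "online_store": DATA_PLANE,
        "offline_store": DATA_PLANE,
    },
}


def moved_components(a: str, b: str) -> Dict[str, Tuple[str, str]]:
    """Return shared components whose location differs between deployments a and b."""
    shared = PLACEMENT[a].keys() & PLACEMENT[b].keys()
    return {
        name: (PLACEMENT[a][name], PLACEMENT[b][name])
        for name in sorted(shared)
        if PLACEMENT[a][name] != PLACEMENT[b][name]
    }


print(moved_components("virtual_private", "hybrid_saas"))
# → {'rift_batch_compute': ('Customer Data Plane', 'Tecton Control Plane')}
```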