Define Prompts

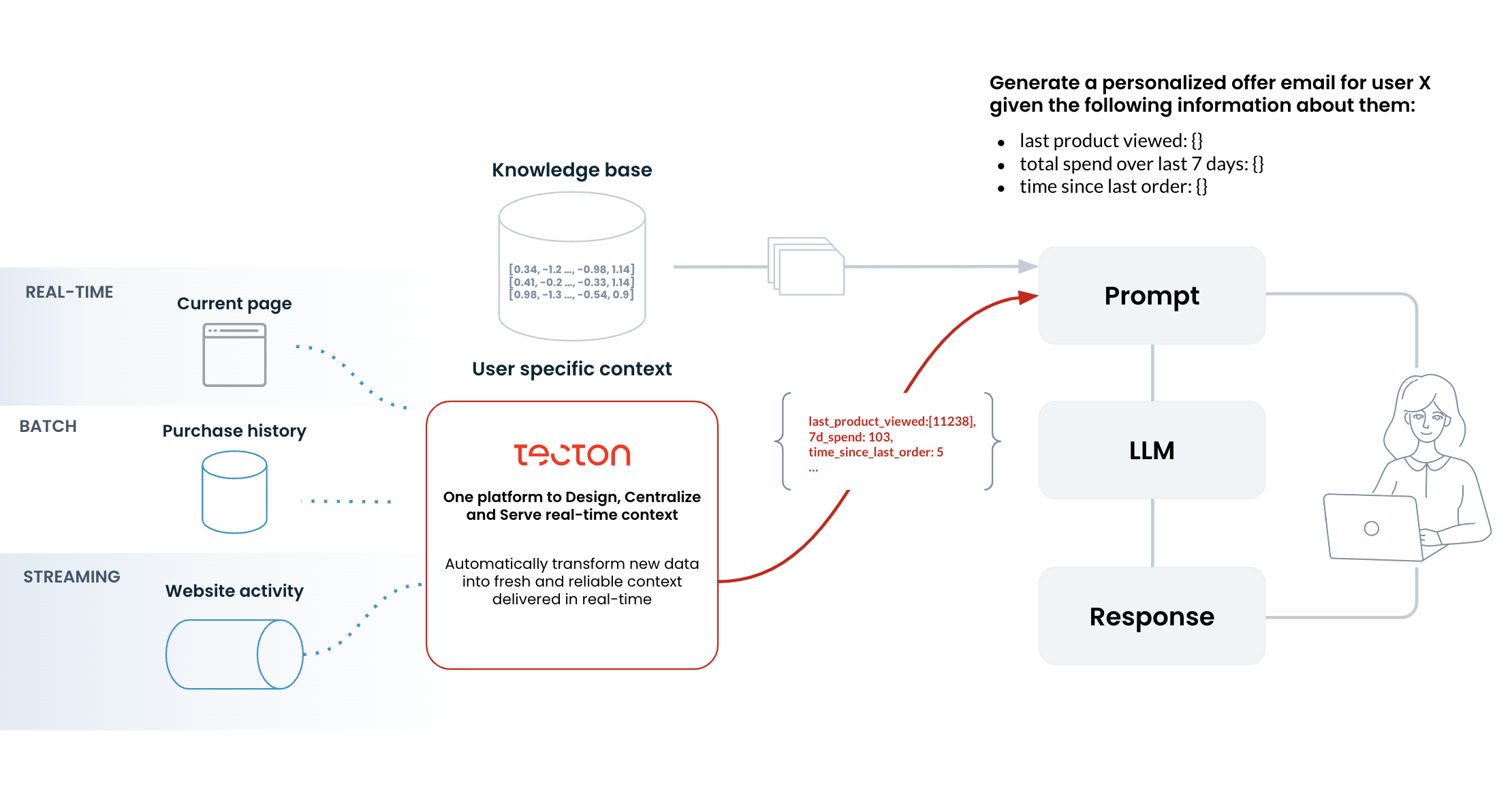

Tecton’s Prompt object enriches input for Large Language Models (LLMs) by integrating contextual feature data in real time. By combining Tecton FeatureViews with LLMs, you can build prompts whose responses are more relevant, personalized, and actionable.

What is a Prompt?

A Prompt in Tecton is an object designed to enrich inputs for LLMs with dynamic, up-to-date data from Tecton's feature platform. By sourcing batch, streaming, or real-time data from FeatureViews, it provides a more personalized and contextual input to the model, which can enhance the quality of its responses.

Why use Prompts?

- Contextual Improvement: Prompts created with FeatureViews are enriched with real-time data, which makes them more contextually relevant and can improve the quality of LLM output.

- Enterprise Ready: Prompts are hosted and versioned centrally, so Prompt definitions work reliably and their data sources stay up to date. Built-in monitoring alerts users if a definition fails or its data becomes stale.

- Backfilling with Historical Context: Tecton provides easy and efficient backfilling of Prompts with historical context, which enables more comprehensive evaluations and accurate model training or fine-tuning.

Defining a Prompt

Creating a Prompt in Tecton is simple. Using the @prompt decorator, you can define a function that outputs a string prompt. This prompt can then be enriched with data from FeatureViews or external request-time inputs.

Example: Simple Greeting Prompt

import openai
from tecton_gen_ai.fco import prompt

@prompt()
def greet_user():
    # The function must return the prompt string
    return "Generate a short, friendly greeting for a user to our website."

# Get the resulting prompt and then get the response from OpenAI.
# Note: openai.Completion is the legacy interface from openai package versions before 1.0.
generated_prompt = greet_user.run_prompt()
response = openai.Completion.create(model="gpt-3.5-turbo-instruct", prompt=generated_prompt)

This example creates a simple prompt that generates a greeting message. This prompt can later be expanded by integrating additional data from FeatureViews or request-time inputs.

Enriching Prompts with Request Data

You can enhance the prompt by integrating data provided at request time, such as user input or real-time contextual data.

Example: Enriching Prompts Using Request Data

In the following example, the greet_user prompt is enriched with a user's name and location passed in at request time:

from tecton_gen_ai.fco import prompt
from tecton_gen_ai.utils.tecton_utils import make_request_source
from tecton.types import String, Field

# Define schema for user input
request = make_request_source(user_name=str, location=str)

@prompt(sources=[request])
def greet_user(request_data):
    return f"Hello, {request_data['user_name']}! How's the weather in {request_data['location']}?"

This prompt is now personalized: it addresses the user by name and refers to their location. To run the prompt against OpenAI's gpt-3.5-turbo-instruct model, use the openai Python package:

from openai import Completion

generated_prompt = greet_user.run_prompt({"request": {"user_name": "Alice", "location": "Oklahoma"}})
response = Completion.create(model="gpt-3.5-turbo-instruct", prompt=generated_prompt)
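Because request-time values come from the caller, it can help to check the payload before it is used to render a prompt. The following is a minimal sketch in plain Python that runs without Tecton. `REQUEST_SCHEMA`, `validate_request`, and `render_greeting` are hypothetical names introduced for illustration, and the schema simply mirrors the `user_name` and `location` fields above. It is not a description of how Tecton validates request sources internally.

```python
# Hypothetical helpers in plain Python; none of these names are part of the Tecton API.
REQUEST_SCHEMA = {"user_name": str, "location": str}


def validate_request(payload: dict, schema: dict = REQUEST_SCHEMA) -> dict:
    """Return the payload if every schema field is present with the expected type."""
    missing = [name for name in schema if name not in payload]
    if missing:
        raise ValueError(f"missing request fields: {missing}")
    for name, expected_type in schema.items():
        if not isinstance(payload[name], expected_type):
            raise TypeError(f"field {name!r} must be {expected_type.__name__}")
    return payload


def render_greeting(request_data: dict) -> str:
    """Same template as greet_user above, as a plain function for local testing."""
    data = validate_request(request_data)
    return f"Hello, {data['user_name']}! How's the weather in {data['location']}?"


if __name__ == "__main__":
    print(render_greeting({"user_name": "Alice", "location": "Oklahoma"}))
```

Keeping the template logic in a plain function like this is one way to unit test the wording of a prompt before wiring it to live request sources.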

Using FeatureViews to Enrich Prompts

Tecton allows you to use feature data to further enrich your prompts. You can access features such as user preferences, past behavior, and historical data in real time.

Example: Personalized Recommendations with FeatureViews

Let’s enrich a prompt that provides restaurant recommendations by using the user’s historical preferences stored in Tecton FeatureViews.

In this example we have a Feature View user_info that contains information we have stored about the user, such as their name and food preferences. We use the request context to find their current location, and combine the information to generate a suggestion for a restaurant location:

from tecton_gen_ai.fco import prompt
from tecton_gen_ai.utils.tecton_utils import make_request_source
from tecton.types import String, Field

# Define the schema for input from the browser
location_request = make_request_source(location=str)

# Define a Prompt that uses a Feature View to generate a personalized restaurant recommendation.
# user_info is the existing Feature View described above; it is not defined in this snippet.
@prompt(sources=[location_request, user_info])
def sys_prompt(location_request, user_info):
    name = user_info["name"]
    food_preference = user_info["food_preference"]
    location = location_request["location"]
    return f"""
    You are a concierge service that recommends restaurants.
    You are serving {name}. Address them by name.
    Respond to the user query about dining.
    If the user asks for a restaurant recommendation respond with a specific restaurant that you know and suggested menu items.
    Suggest restaurants that are in {location}.
    If the user does not provide a cuisine or food preference, choose a {food_preference} restaurant.
    """

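The text returned by sys_prompt reads like a system prompt, so it would typically be paired with the user's actual question before being sent to a chat model. The sketch below shows one way to do that in plain Python. It assumes the widely used chat message format of `role`/`content` dictionaries. `build_messages` is a hypothetical helper, and the `rendered` string, including the name and city in it, is a shortened, made-up stand-in for what the Prompt would return.

```python
from typing import Dict, List


def build_messages(system_prompt: str, user_query: str) -> List[Dict[str, str]]:
    """Pair a rendered system prompt with the user's query (hypothetical helper)."""
    return [
        # Strip the leading/trailing newlines left by the triple-quoted template.
        {"role": "system", "content": system_prompt.strip()},
        {"role": "user", "content": user_query},
    ]


# Shortened, made-up stand-in for the string the Prompt would produce.
rendered = """
You are a concierge service that recommends restaurants.
You are serving Jim. Address them by name.
Suggest restaurants that are in Charlotte, NC.
"""

messages = build_messages(rendered, "Where should I eat tonight?")

if __name__ == "__main__":
    for message in messages:
        print(message["role"], "->", message["content"][:40])
```

The resulting list can then be passed to whichever chat-capable LLM client you use. That client call is left out here because it depends on the provider and library version.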
For a step-by-step example of how to use Prompts, see the Contextualized Prompts Tutorial.