Tecton Concepts

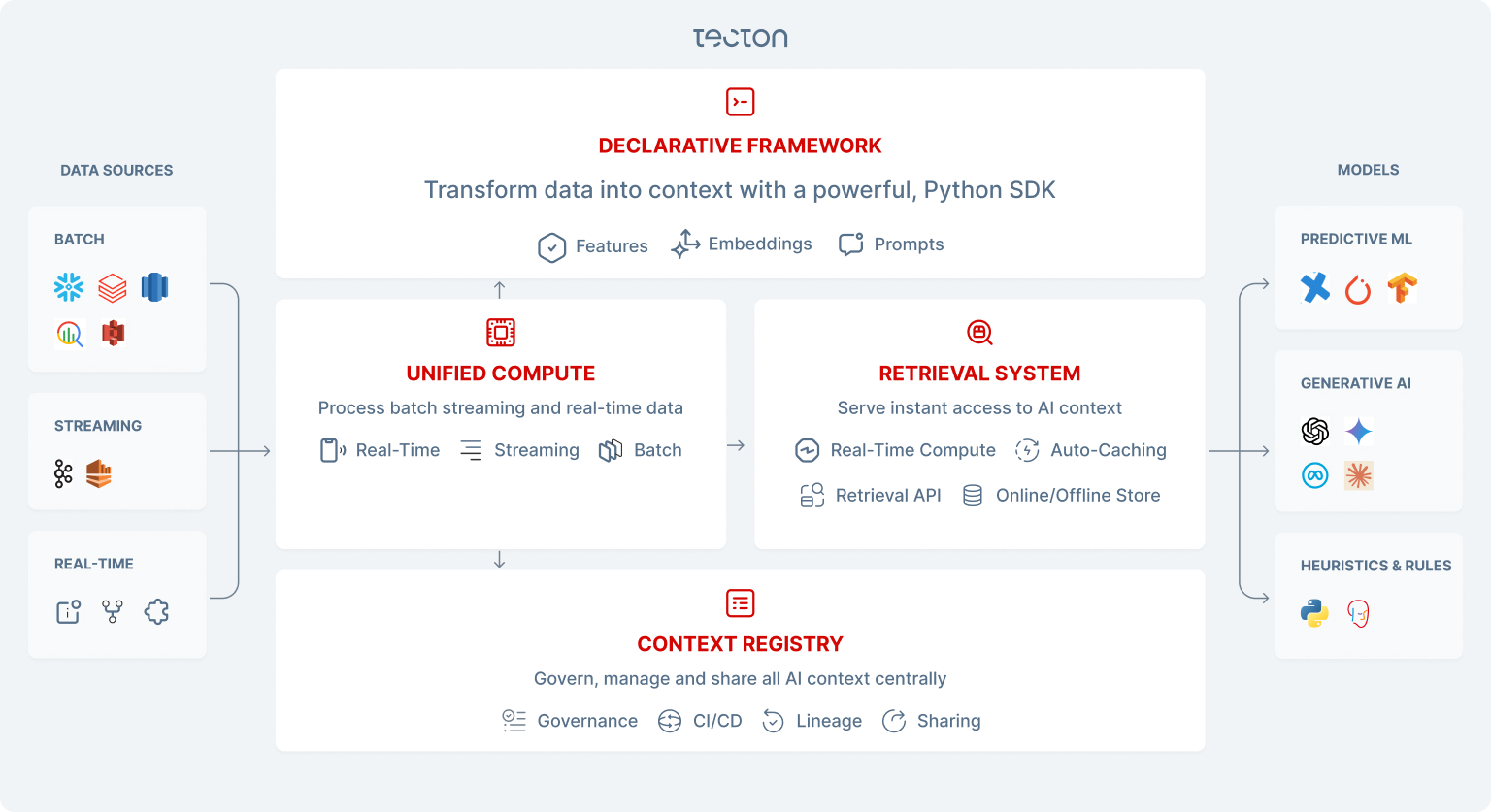

Tecton is an AI Data Platform for the enterprise.

At a high level, Tecton abstracts away the complex engineering needed to transform, manage, store, and retrieve data as context (i.e. features, embeddings, prompts) for productionizing AI applications.

The Tecton platform is composed of the following components:

- Declarative Framework: SDK for defining context-as-code and enforcing production best practices battle-tested across trillions of AI predictions at leading companies.

- Unified Compute: Compute and orchestration layer for seamless derivations of common and complex AI context types: Features, Embeddings, Prompts — from all sources of data across Batch, Streaming, and Realtime.

- Retrieval System: System for optimal, low-latency, high throughput storage and serving of context to AI models.

- Context Registry: Tool suite for governance, comprehensive security, collaboration, and monitoring of context.

A Feature Store is a system designed to store and serve feature values for AI applications.

An AI Data Platform addresses the full range of challenges in building and maintaining features, embeddings, and prompts for operational AI applications. In addition to storing and serving context, AI Data Platforms have support for defining, testing, orchestrating, monitoring, and managing all types of context.

Key Tecton Concepts

Tecton Repository

A Tecton Repository is a collection of Python files containing Tecton Object Definitions, which define feature, embedding, or prompt pipelines and other dataflows within Tecton's framework. These repositories are typically stored in a Source Control Repository, like Git, enabling version control and collaboration.

Workspace

Workspaces are remote environments where Tecton Object Definitions are applied and turned into data pipelines and services orchestrated and managed by Tecton.

When a Tecton Repository is

applied to a

workspace using the tecton apply command via the Tecton CLI or

a CI/CD pipeline, the Object Definitions within the repository update the

Workspace Configuration. The Workspace Configuration encompasses the set of

Tecton Object Definitions currently active in a workspace.

It's common for different teams and organizations to have their own workspaces set up with their own access controls.

There are two types of workspaces:

-

Live:

Live Workspaces generate real-time endpoints accessible in the production environment. These workspaces utilize data infrastructure resources, which can lead to significant infrastructure costs. They are intended for serving production applications and can also be used to create staging environments for testing feature or embedding pipelines before moving to production.

Feature and embedding definitions that are applied to a live workspace will begin materialization according to the materialization configuration of the Feature Views (e.g.

online=Trueoroffline=True). -

Development:

Development Workspaces are used for development and testing purposes. These workspaces do not connect to the production environment or serve in real-time or materialize data (regardless of the materialization configuration), thus avoiding substantial infrastructure costs. Features and embeddings in development workspaces are discoverable via the Web UI and can be fetched and run ad-hoc from a notebook for testing.

Materialization

Materialization is the process of precomputing feature or embedding data by executing a data pipeline and then publishing the results to the Online or Offline Store. Materialization enables low-latency context retrieval online and faster offline retrieval queries.

Materialization can be scheduled by Tecton or triggered via an API.

Compute Engines

Tecton comes with a built-in compute engine called Rift which can compute batch, stream, and real-time features, embeddings, and prompts consistently online and offline all using vanilla Python and SQL. Rift can also optionally plug into data warehouses like Snowflake or BigQuery and push compute down to the warehouse when appropriate.

Tecton can also leverage Spark for computing batch and stream features or generating training data. Tecton connects to data platforms like AWS EMR and Databricks to execute and manage Spark jobs.

Offline Store

The Offline Store in Tecton is used for staging the results of intermediate transformations in order to speed up the retrieval of historical offline context values. Full offline context values can be optionally published to your data warehouse.

Online Store

The Online Store is a low-latency key-value store that holds the latest values of pre-computed features, embeddings, or prompts. It is located in the online environment and is used to look up context for online inference.

Feature Server

The Feature Server is a managed Tecton service that fetches real-time feature values from the Online Store, running real-time feature transformations, and returning feature vectors via an HTTP API.

Server Groups

Server Groups provide a way to isolate and scale the serving infrastructure for different feature services. Each server group can be configured with a different desired number of nodes and autoscaling policies. There can be two different kinds of server groups:

- Feature Server Groups: These server groups are responsible for reading and serving feature vectors from the online store.

- Transform Server Groups: These server groups are responsible for running real-time feature view transformations.

Monitoring and Alerting

There are two types of monitoring that Tecton manages:

- System Monitoring: Tecton monitors for job health, service uptime, and request latencies.

- Data Quality Monitoring (Public Preview): Tecton monitors the quality of the data generated by feature pipelines, as well as for data drift in features between training and serving.

Tecton has built in alerting for system-related and data-quality-related issues.

Tecton Product Interfaces

Tecton SDK

Tecton's Python SDK is used for:

- Defining and testing feature, embedding, and prompt pipelines

- Generating datasets for training and batch inference

Tecton Web UI

The Tecton Web UI allows users to browse, discover, and manage feature and embedding pipelines. In the web UI you can also:

- Understand lineage

- Configure access controls

- Manage users

- Check the status of backfills

- Monitor, cancel, and re-run materialization jobs

- Understand data quality

- Monitor online Feature Services

Each Tecton account is associated with a URL (e.g. <your-account>.tecton.ai)

and contains all of the workspaces created in that account.

Tecton CLI

The

Tecton CLI

is primarily used for

managing workspaces,

applying feature repository changes to a workspace

(via the tecton apply command), and creating API keys.

Tecton HTTP API

Tecton's HTTP API is used for retrieving feature vectors at low-latency online in order to power online model inference.