Create and Manage Datasets

Tecton Datasets allow for conveniently saving feature data that can be used for model training, experiment reproducibility, and analysis. Datasets are versioned alongside your Feature Store configuration, allowing you to inspect and restore the state of all features as of the time the dataset was created. Tecton Datasets can be created in two ways:

- Saving training DataFrames that are requested from Feature Services

- Logging online requests to a Feature Service

Saved Training DataFrames

Tracking feature training DataFrames as Tecton Datasets has a number advantages:

- Datasets are tracked and catalogued in one central place.

- Datasets are identified with a single string, which you can store alongside other model parameters in model metadata stores such as MLFlow.

- When you save a Dataset, Tecton stores both the data and the metadata associated with the features (eg, data sources, transformation logic) - allowing you to track the full lineage of a Dataset.

To create a Dataset from historical data, use Remote Dataset Generation.

After a Dataset Job is completed, a Dataset will be available in the Web UI and via SDK:

dataset = ws.get_dataset("my_training_data")

Logged Online Requests

Feature Services have the ability to continuously log online requests and feature vector responses as Tecton Datasets. These logged feature datasets can be used for auditing, analysis, training dataset generation.

To enable feature logging on a Feature Service, simply add a LoggingConfig like

in the example below and optionally specify a sample rate. Then run

tecton apply to apply your changes.

from tecton import LoggingConfig

ctr_prediction_service = FeatureService(

name="ctr_prediction_service",

description="A Feature Service used for supporting a CTR prediction model.",

features=[ad_ground_truth_ctr_performance_7_days, user_total_ad_frequency_counts],

logging=LoggingConfig(sample_rate=0.5),

)

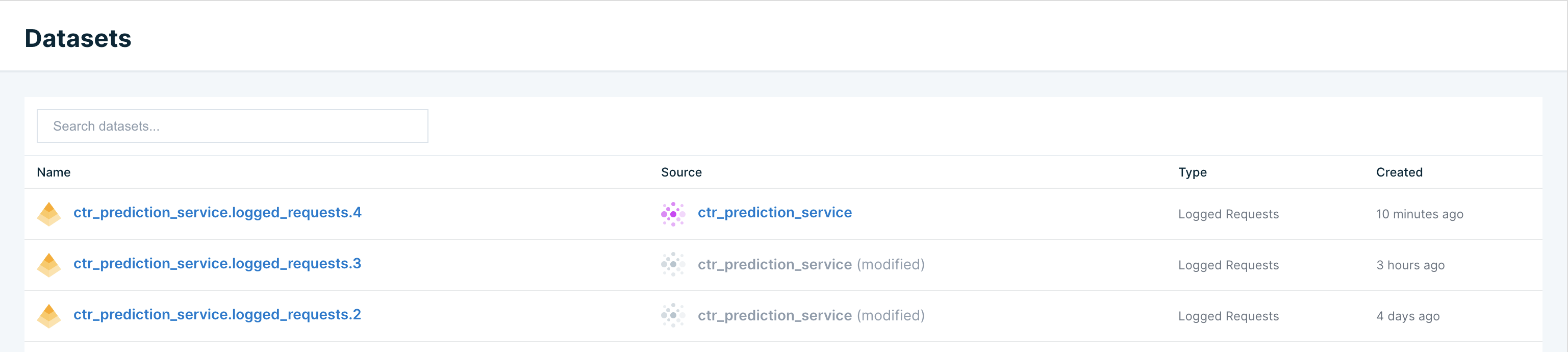

Within 60 seconds, this will create a new Tecton Dataset under the Datasets tab

in the Web UI. This dataset will continue having new feature logs appended to it

every 30 mins. If the features in the Feature Service change, a new dataset

version will be created. Datasets are named with the following convention:

<Feature Service name>.logged_requests.<Version>. The Dataset with the highest

version number for a Feature Service will be the latest active dataset.

Interacting with Datasets

Datasets can be fetched by name using the code snippet below:

import tecton

ws = tecton.get_workspace("prod")

my_training_data = ws.get_dataset("my_training_data")

# View my_dataset as a Pandas DataFrame

my_training_data.to_dataframe().to_pandas().head()

Fetch a Dataset's Events DataFrame

All Tecton Datasets contain a reference to their "events DataFrame", which contains the join keys and request data used to generate feature vectors.

If the Dataset was saved during training data generation, then this DataFrame

was passed to Tecton during the call to get_features_for_events(...).

In the case of a logged requests Dataset, this DataFrame is the accumulated list of online requests to the Feature Service.

To fetch a Dataset's events DataFrame, run the following code in a notebook:

import tecton

ws = tecton.get_workspace("prod")

dataset_events = ws.get_dataset("my_training_data").get_spine_dataframe()

dataset_events.to_pandas().head()

This can be used as input to reproduce a Dataset from scratch, or test out new features.

Deleting Datasets

You can delete the dataset using the

workspace.delete_dataset('my_training_data') method. The underlying data will

be cleaned up from S3 and the dataset record will not appear in lookups. Please

note, for Logged datasets, feature logging on the Feature Service needs to be

disabled before deleting the dataset.

import tecton

ws = tecton.get_workspace("prod")

ws.delete_dataset("my_training_data")