Read Feature Data for Prompt Engineering

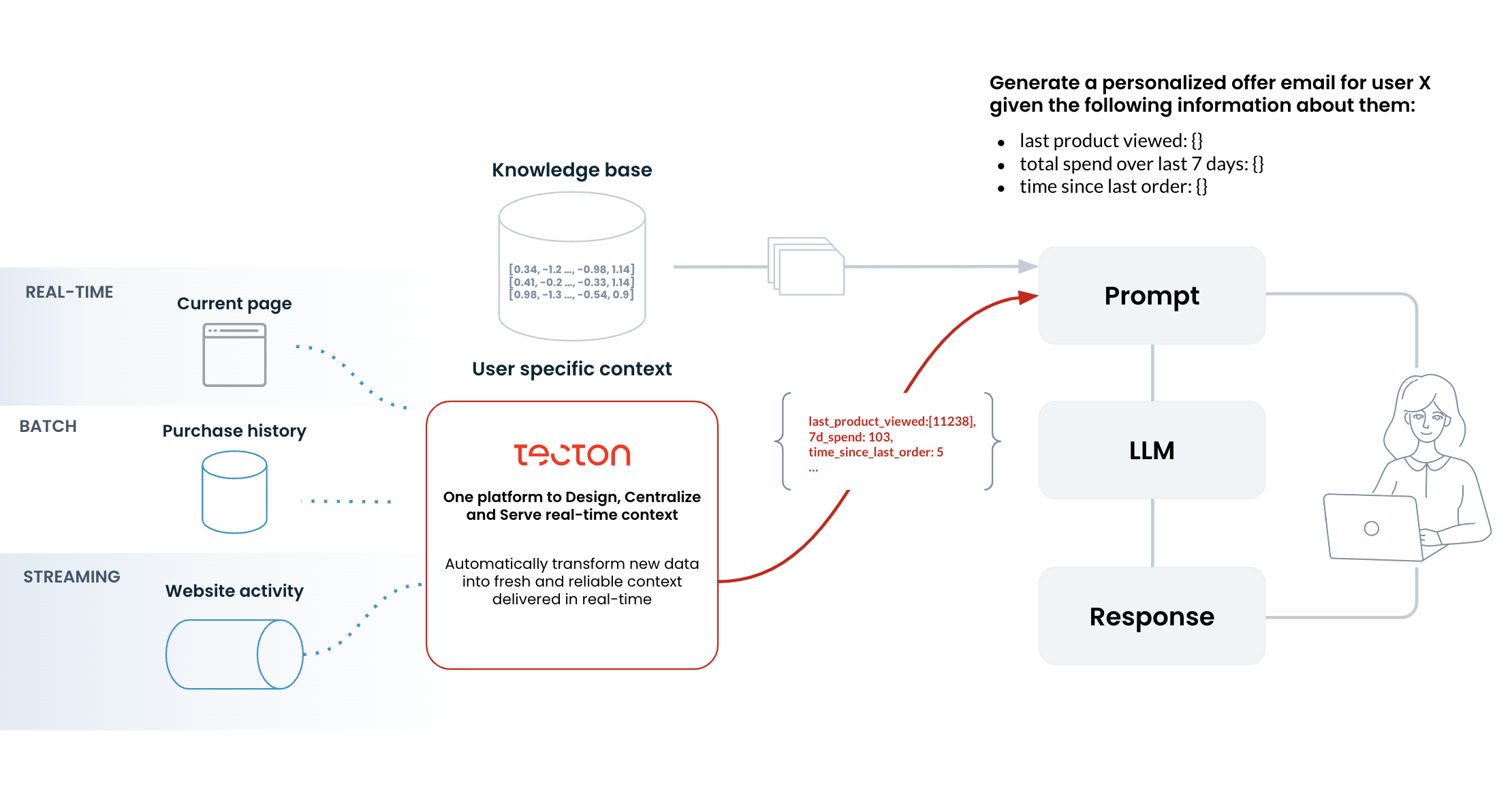

This section covers how to read feature data from Tecton to build prompts for generative AI models.

Generate Contextual Prompts with On-Demand Feature Views

A contextual prompt is created by using an On-Demand Feature View (ODFV) to read features and insert them into a prompt. Because an ODFV can depend on batch and streaming Feature Views, it can fill pre-defined prompt templates with rich context in real time as customer events unfold.

The following example builds a prompt for a large language model (LLM) that generates a personalized offer email for a user based on their activity:

    from tecton import RequestSource, on_demand_feature_view
    from tecton.types import Field, String

    from user.features.stream_features.product_interactions import (
        last_product_viewed,
        last_order_metrics,
    )
    from user.features.batch_features.weekly_spend import (
        weekly_spend,
    )

    # The user's name is supplied at request time rather than read from the feature store.
    request_schema = [Field("user_name", String)]
    offer_email_request = RequestSource(schema=request_schema)

    output_schema = [Field("offer_email_prompt", String)]


    @on_demand_feature_view(
        sources=[offer_email_request, last_product_viewed, last_order_metrics, weekly_spend],
        mode="python",
        schema=output_schema,
        description="Contextual prompt for offer generation email",
    )
    def offer_email_prompt(offer_email_request, last_product_viewed, last_order_metrics, weekly_spend):
        # Template lines are left unindented so the prompt carries no leading whitespace.
        offer_email_prompt_template = """\
    Generate a personalized offer email for user {name} given the following information about them:
    last product viewed: {last_product_viewed}
    total spend over last 7 days: {last_week_spend}
    time since last order: {time_since_last_order}
    """
        # Fill the template with the request-time name and the precomputed feature values.
        offer_email_prompt_with_context = offer_email_prompt_template.format(
            name=offer_email_request["user_name"],
            last_product_viewed=last_product_viewed["product_name_last_7d_10m"],
            last_week_spend=weekly_spend["amt_sum_7d_1d"],
            time_since_last_order=last_order_metrics["time_since_last_order"],
        )
        return {"offer_email_prompt": offer_email_prompt_with_context}
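Because the body of a `mode="python"` ODFV is ordinary Python operating on dictionaries, the template-filling step can be checked locally before the feature view is applied. The sketch below repeats the same template and `str.format` call in a standalone function. The function name `build_offer_email_prompt` and the sample values are illustrative assumptions; they are not part of the Tecton SDK and do not come from real feature data.

```python
# Standalone sketch of the template-filling step; no Tecton imports are needed.
OFFER_EMAIL_PROMPT_TEMPLATE = """\
Generate a personalized offer email for user {name} given the following information about them:
last product viewed: {last_product_viewed}
total spend over last 7 days: {last_week_spend}
time since last order: {time_since_last_order}
"""


def build_offer_email_prompt(offer_email_request, last_product_viewed, last_order_metrics, weekly_spend):
    """Fill the prompt template from plain dicts shaped like the ODFV inputs."""
    prompt = OFFER_EMAIL_PROMPT_TEMPLATE.format(
        name=offer_email_request["user_name"],
        last_product_viewed=last_product_viewed["product_name_last_7d_10m"],
        last_week_spend=weekly_spend["amt_sum_7d_1d"],
        time_since_last_order=last_order_metrics["time_since_last_order"],
    )
    return {"offer_email_prompt": prompt}


# Hypothetical sample inputs, one dict per source of the feature view.
sample_output = build_offer_email_prompt(
    {"user_name": "John Doe"},
    {"product_name_last_7d_10m": "blue mens tshirt"},
    {"time_since_last_order": "5 days"},
    {"amt_sum_7d_1d": "$103"},
)
print(sample_output["offer_email_prompt"])
```

Keeping the formatting logic this simple makes it easy to unit test the prompt wording separately from feature retrieval.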

Retrieve Generated Prompts

A Feature Service retrieves generated prompts for both training and online serving. The following example defines a Feature Service that returns the contextual prompt for the personalized offer email use case above:

    from tecton import FeatureService

    from user.features.stream_features.product_interactions import (
        last_product_viewed,
        last_order_metrics,
    )
    from user.features.batch_features.weekly_spend import (
        weekly_spend,
    )
    from user.features.on_demand_features.offer_email_prompt import (
        offer_email_prompt,
    )

    offer_email_context_service = FeatureService(
        name="offer_email_context_service",
        description="A Feature Service used for realtime context generation for sending user emails",
        online_serving_enabled=True,
        features=[last_product_viewed, last_order_metrics, weekly_spend, offer_email_prompt],
    )

Once a Feature Service is created, it can serve inference requests through any of the methods described in Read Feature Data for Inference. The following example uses the HTTP API to retrieve the generated prompt:

    $ curl -X POST https://<your_cluster>.tecton.ai/api/v1/feature-service/get-features \
        -H "Authorization: Tecton-key $TECTON_API_KEY" \
        -d '{
      "params": {
        "workspace_name": "prod",
        "feature_service_name": "offer_email_context_service",
        "join_key_map": {
          "user_id": "C1000262126"
        },
        "request_context_map": {
          "user_name": "John Doe"
        }
      }
    }'

Response

    {
      "result": {
        "features": ["blue mens tshirt", "5 days", "$103", "Generate a personalized offer email for user John Doe given the following information about them:
    last product viewed: blue mens tshirt
    total spend over last 7 days: $103
    time since last order: 5 days"]
      }
    }
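The same request can also be assembled from Python. The following is a minimal sketch using only the standard library: it builds the POST request that the curl command issues but does not send it. The helper name `build_get_features_request`, the cluster URL `https://example-cluster.tecton.ai`, and the API key value are placeholders chosen for illustration.

```python
import json
import urllib.request


def build_get_features_request(cluster_url, api_key, workspace_name,
                               feature_service_name, join_key_map, request_context_map):
    """Build (but do not send) the get-features POST request shown in the curl example."""
    payload = {
        "params": {
            "workspace_name": workspace_name,
            "feature_service_name": feature_service_name,
            "join_key_map": join_key_map,
            "request_context_map": request_context_map,
        }
    }
    return urllib.request.Request(
        url=f"{cluster_url}/api/v1/feature-service/get-features",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Tecton-key {api_key}"},
        method="POST",
    )


# Placeholder cluster URL and API key; substitute real values before sending.
request = build_get_features_request(
    cluster_url="https://example-cluster.tecton.ai",
    api_key="my-api-key",
    workspace_name="prod",
    feature_service_name="offer_email_context_service",
    join_key_map={"user_id": "C1000262126"},
    request_context_map={"user_name": "John Doe"},
)

# Sending is left commented out because it needs a live cluster and a valid key:
# with urllib.request.urlopen(request) as http_response:
#     get_features_response = json.load(http_response)
```

Separating request construction from sending keeps the payload easy to inspect and test without network access.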

The retrieved contextual prompt can then be passed to OpenAI, Anthropic, or any other LLM of choice to obtain the personalized offer email. Here's an example with OpenAI:

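    import openai  # this example uses the legacy (pre-1.0) openai SDK interface

    # get_features_response is the parsed JSON body returned by the get-features call above.
    response = openai.Completion.create(
        model="gpt-3.5-turbo-instruct",
        prompt=get_features_response["result"]["features"][3],  # the prompt is the fourth feature
    )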

Response

    {
      "id": "cmpl-uqkvlQyYK7bGYrRHQ0eXlWi7",
      "object": "text_completion",
      "created": 1589478378,
      "model": "gpt-3.5-turbo-instruct",
      "choices": [
        {
          "text": "Subject: Exclusive Offer: 15% Off Your Next Purchase, John!
    Hi John,
    We noticed your interest in our blue men's t-shirt. As a valued customer,
    we have an exclusive offer just for you! Enjoy 15% off your next purchase
    for the next 48 hours. Use code `EXCLUSIVE15` at checkout.
    It's our way of saying thanks for your loyalty.
    Hurry, this offer won't last long! Shop now and save.
    Any questions? Our team is here to help. Just reach out to us.
    Thanks again for choosing us, John. Happy shopping!",
          "index": 0,
          "logprobs": null,
          "finish_reason": "length"
        }
      ],
      "usage": {
        "prompt_tokens": 5,
        "completion_tokens": 7,
        "total_tokens": 12
      }
    }
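Since the prompt can be sent to any LLM provider, it can help to keep prompt extraction separate from the provider call. The following is a minimal sketch under that assumption. `extract_prompt` and `generate_offer_email` are hypothetical helper names, `complete` is any caller-supplied function that sends a prompt to an LLM and returns its text, and the default index of 3 matches the position of the prompt in the example get-features response on this page.

```python
from typing import Any, Callable, Dict


def extract_prompt(get_features_response: Dict[str, Any], prompt_index: int = 3) -> str:
    """Return the prompt string from a get-features response, checking its type."""
    features = get_features_response["result"]["features"]
    prompt = features[prompt_index]
    if not isinstance(prompt, str):
        raise TypeError(f"feature at index {prompt_index} is not a string prompt")
    return prompt


def generate_offer_email(get_features_response: Dict[str, Any],
                         complete: Callable[[str], str],
                         prompt_index: int = 3) -> str:
    """Extract the prompt and hand it to a provider-specific completion function."""
    return complete(extract_prompt(get_features_response, prompt_index))


# Stand-in for a real provider call so the sketch runs without network access.
def fake_complete(prompt: str) -> str:
    return f"[model output for a {len(prompt)}-character prompt]"


# Hypothetical response with a shortened prompt, shaped like the example above.
example_response = {
    "result": {
        "features": [
            "blue mens tshirt",
            "5 days",
            "$103",
            "Generate a personalized offer email for user John Doe",
        ]
    }
}
email = generate_offer_email(example_response, fake_complete)
print(email)
```

In practice, `fake_complete` would be replaced by a thin wrapper around the chosen provider's client, so switching providers does not touch the extraction logic.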